Introduction

Welcome to the manual for Austen, the parser generator. I hope you enjoy

reading this document; it currently has no set layout, but roughly progresses through

introduction, lexical analysis, basic grammar analysis, and then advanced grammar.

This

document is primarily meant to be the minimal place where all the features of Austen are

described, if not necessarily very well.

What does Austen do?

Austen is a parser generator. That is, a programmer who needs to

read text files in a certain format, would use Austen to build parsing code

in their language of choice (in this case, it's just Java for now), as

described more simply via another language specifically designed for

describing languages. But, you probably know that if you are reading this.

The important aspects of the current version of Austen are that it

uses a language that describes parsers based on Parsing Expression Grammars (PEGs) to describe the type of

text parsed, and that the current version includes a step of tokenization

of the text first. This is significant because firstly, PEGs are neat and

are a nice intuitive way to describe a language, but in contrast to

other PEG based tools (that I know of), Austen is a bit strange in

needing separate tokenisation. I would argue that there are advantages to this

approach, and the drawbacks are few (even for combining languages). Having said that,

I am open to expanding Austen in the future to have a “scanner-less” mode.

The other important thing to note about Austen, before getting into a

few more specifics, is that Austen uses memoisation, in the fashion

of the Packrat parsing algorithm (developed by Bryan Ford). It does not

have to though, and one of its features is selective control over

memoisation. The current version does not allow easy exclusion of all

memoisation, but future versions will.
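To make the idea of caching parse results concrete, here is a minimal Java sketch of a single rule that remembers its own results. It is purely illustrative: the class MemoSketch, the "digits" rule, and the cache layout are hypothetical names invented for this example, and this is not the code Austen generates.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical illustration of Packrat-style result caching; not Austen output.
class MemoSketch {
    private final String input;
    // Cache: start position -> end position of the match, or -1 for failure.
    private final Map<Integer, Integer> memo = new HashMap<>();
    // Counts how often the rule body actually runs.
    int evaluations = 0;

    MemoSketch(String input) {
        this.input = input;
    }

    // Rule "digits": one or more decimal digits starting at pos.
    int digits(int pos) {
        Integer cached = memo.get(pos);
        if (cached != null) {
            return cached; // reuse the earlier result instead of re-parsing
        }
        evaluations++;
        int p = pos;
        while (p < input.length() && Character.isDigit(input.charAt(p))) {
            p++;
        }
        int result = (p > pos) ? p : -1;
        memo.put(pos, result);
        return result;
    }

    public static void main(String[] args) {
        MemoSketch parser = new MemoSketch("1234+5");
        System.out.println(parser.digits(0));   // first call does the work
        System.out.println(parser.digits(0));   // second call is answered from the cache
        System.out.println(parser.evaluations); // how many times the rule body ran
    }
}
```

The cost of this approach is memory: every cached (rule, position) result stays in the table, which is why being able to switch the cache off for selected rules is useful.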

Prominent features in detail

The above is a very rough and uninformative description of Austen, and

probably does not excite the reader into wanting to further their use

of this tool. So, now comes the marketing spiel, with the significant

features, as I see them, highlighted. The following is

in no particular order.

Target language independence

Okay, the current version only outputs in Java, but the grammar description

files are target language neutral, and it is planned that future versions will

generate output in multiple languages, from the same grammar specification.

Java was initially chosen, as that is the language I most often use.

Separate tokenization

At first this may seem to not be a feature, but a deficiency. One of the

selling points of PEGs is that they are scannerless. That is, a separate

tokenisation step is not necessarily required, as the rules that specify

the semantics can just as easily do the tokenization. So, why do I force

the user of Austen to use a separate tokenization step? Well, I do not

intend to always do this, but the initial version does, and it does this

because I feel that just because something can be done

does not mean it should be.

Due to the nature of memoisation it seems, to me, bizarre to push up

the memory requirements to do silly things like tokenize key words, or

to include comments in parsing, when we could skip over that by an initial

tokenisation step. Yes, we limit some power for some esoteric languages,

but such situations do not occur to me, for typical use.

The major objection will come with composition of languages, where in

one language a word is a keyword, for example, but in another it is not.

Austen eats such problems for breakfast though, as the tokenisation

allows tokens to be of multiple types (such as a keyword, as well as an

identifier), and thus the composition ability of PEGs is not compromised

(or at least not for likely language compositions).

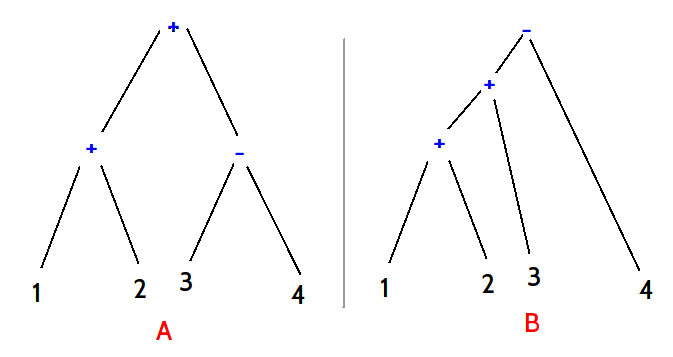

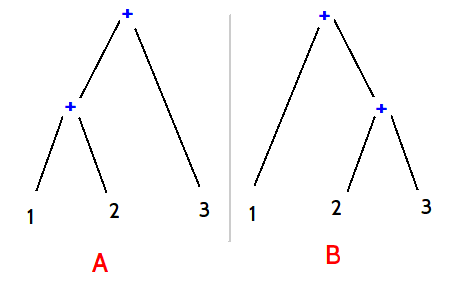

Left recursion, with priorities

One of the big plus points with Austen is that it handles left recursion

in rules. Yes, I know lots of PEG tools do that nowadays, but Austen is

significant for a number of reasons.

First, it works. For direct recursion, and indirect. And, it works whether

or not memoisation is used on a rule. And here is the killer: it

handles ordered left-recursion in a way that is intuitive and useful, and

allows the user to neatly write left-recursive grammars, with right or

left associativity. I think this is very significant, because it makes

PEGs truly user-friendly and practical. But more on that later.

Extended PEGs

This is very cool stuff, though I'm not sure of the uses yet. Austen, as

said a number of times, is based on Parsing Expression Grammars, but extends

that specification further. All the standard operators are there, plus a

little syntactic sugar, but also the specification is extended with the

use of rule variables.

The tokenisation step assigns a type to tokens. They may be integers or

strings, or floats, or booleans. Given this, it is possible to write

grammars that interact more closely with the text being read, with

the parser interacting with read tokens, and using this to influence further

parsing. Skip to the later section on extended PEGs to get a look at what I mean.

Modular

Austen allows a modular approach to grammars (and tokenization), allowing a

library of useful language components to be built up.

What's new in Version 1.1

Version 1.1 has a bunch of new and exciting features. These include:

- Bug fixes to the extended PEG code. Previously there were some issues using variables, and tagging them. These have been fixed.

- The inclusion of "start" and "end" blocks for LEX tokenising. This allows lex style operations to occur at the beginning and end of parsing.

- Lex operations extended, with the inclusion of "if-else" structures.

- Token marking, and token reversing operations. This allows a user to mark a section of tokens, to later be reversed (or deleted). This can be useful for integrating right-to-left sections within an overall left-to-right document.

- User variables are allowed for lex blocks, allowing parsing actions to be dependent on user input values.

- The use of user "interface" definitions, that are used in both tokenisation and packrat parsing, to allow greater interaction with user code.

- The inclusion of system variables for getting access to line and character information for lex tokenisation.

- The inclusion of system variables for getting access to line and character information for packrat parsing.

- The ability to make non-memoisation the default in packrat parsing, with memoisation the exception.

- User variables are allowed for packrat blocks, allowing parsing actions to be dependent on user input values. Mixed with the similar use in lex tokenisation, this allows for indentation dependent parsing.

- The ability to provide hints for syntactic errors in the packrat parse.

- The ability to match any token.

Running Austen

Austen is provided as a jar (or source code for compiling if you are brave), and

as such, needs to be run with a command similar to:

java -jar Austen.jar [ options ] files

If no files are specified, then the simple graphical user interface will appear. Otherwise, if "--help" is

specified as an option then the following is printed, explaining the available options:

Austen help (Austen --help)

Usage: Austen [OPTION]... [FILES]...

A parser generator for JAVA using Packrat Parsing, with PEG grammars, as well as lexical analysis via NFA based token parsing.

Options

--help - Show this message

-user [user path] - Sets alternative path for user files

-output [output path] - Sets alternative path for the output

-library [library path] - Sets alternative path for library files

-clean - clear target directories before outputing build results

The user must specify every file that is to produce output. Other Austen files may be accessed (via

the "import" option - see below), but unless specified, those files will not result in any generated

Java code.

The "user path" is the default path for searching for files specified by "import". The

"output path" is the path where all generated code is stored. The "library path" is where files that

are shared across projects are stored.

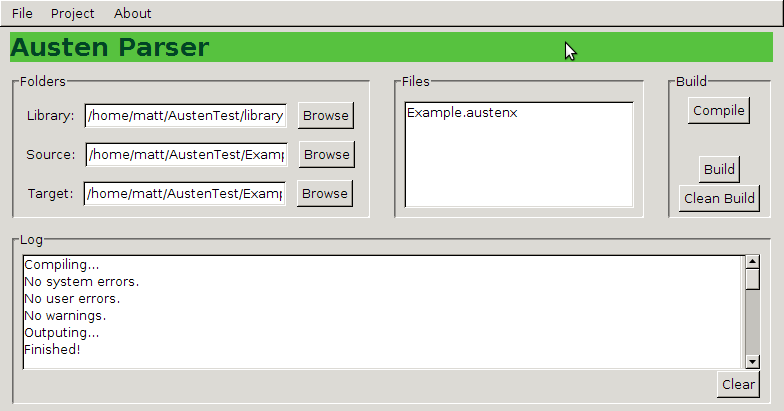

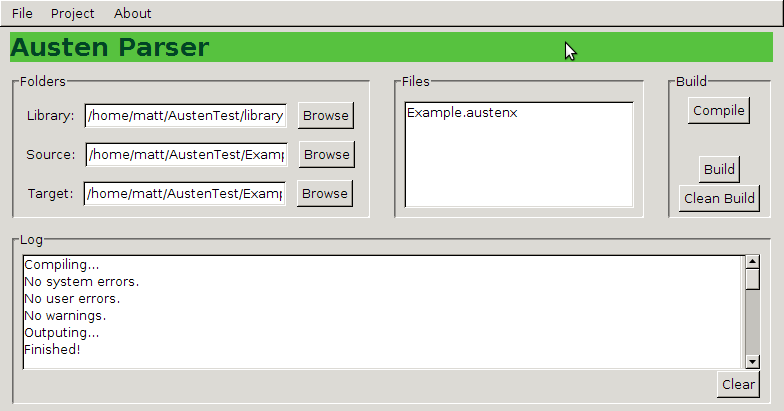

The GUI interface

If no files or options are specified (or the jar is started simply by double-clicking), then a

simple GUI interface will appear. This looks similar to the following:

Operation should be reasonably straightforward. The main task is that three folders ought to be specified (two at the minimum).

The first is the "library" folder, which contains Austen files that are shared amongst projects. The second, the "source"

folder, is the folder where all the Austen files for a project sit. The vital difference between the two

folders is that in a build, only files from the "source" folder will actually result in the creation of

any generated code. The library code must be generated separately, by using it as the source in another project.

The third and final folder is the "target" folder, which is the base folder for the generated code. It

ideally should be a folder that is set aside entirely for code generated by Austen.

Once the "source" folder has been selected, the files in that folder should appear in the "Files" list.

Austen files should end in ".austen", or ".austenx" (either is fine).

The buttons on the right allow the compiling and building. Compiling is just for testing. The Austen files

will be read and interpreted, and any errors will be noted, but no code will be produced, even if there are no

errors.

The two build options do all that "Compile" does, but also produce code, if there are no errors. The

difference between the two is that "Build" overwrites files in the target folder, and leaves any generated files

that were there previously, but are not part of the new set of files. This can happen as a project changes, as

Austen produces a number of non-user files that are named in a sometimes inconsistent way. Using "Clean Build"

will result in the target folder being cleared of all files first (use wisely!).

The Log

The Log informs the user of the compilation/build progress (sorry, no fancy progress bars yet). The

output should be self-explanatory. Sometimes the errors given may be a bit strange (the error logging system

needs a bit of work), but they should direct the user to problems reasonably well. Use the "Clear" button to clear

previous output.

Projects

There is support for a simple form of project. Once folders have been set up, they can be saved as a project

by using the "Save"/"Save As" option in the "File" menu. As expected, use "Open" to open a previously saved project,

and the "Recent" menu to quick load previously saved projects.

File format

There are a number of different types of parser objects defined within an Austen file, including tokens, lex,

and peg blocks. There is no special file for each, and all can occur in one file. An example file might look something

like this:

output example.parser;

tokens ExampleTokens {

...

}

lex ExampleLex tokens ExampleTokens uses ExampleDefs{

...

}

define library ExampleDefs {

...

}

peg ExamplePEG tokens ExampleTokens {

...

}

The above example shows the basic layout where all the parsing components are in the same file. The order of

declaration, in general, is not significant. The most

important line to take note of, before the finer details of the bulk of the file are examined, is the

first line:

output example.parser;

The Output Declaration

The output declaration specifies package information for where the generated code will go. This is

the most language specific part of Austen, and it would be nice to avoid it, but for now, it directly translates

to the root Java package where the generated source files will end up. The files will not end up in one package,

but each component will use a different sub package from the specified root.

It is possible to specify different root packages for different components. To do this, just issue a new

output declaration before the definition of the component of interest. The compiler will complain if no

output declaration has been made before a component that produces output (library definitions do not necessarily

produce code).

The fact that the output declaration translates directly to a Java concept is not something I

envisage as causing problems when more languages are added, as the package concept will map (I expect) in one way or another

to any language I am planning on adding.

The Import Declaration

While it is possible to put all the parser components into one file, it is not necessary. On large projects, it

may well be wise not to, in fact. To utilise the components declared in an external file, the import declaration is used.

The import declarations must be the first items in a file, and are of the form:

import namespace="Filename.austen";

The "namespace" is used for referencing external components within the file, and in general is used wherever a

component is referenced by prefacing the reference with the namespace name followed by a colon, ":". The following example

shows the previous example split over two files:

TokensDefs.austen

output example.parser;

tokens ExampleTokens {

...

}

define library ExampleDefs {

...

}

Main.austen

import tokenDefs="TokensDefs.austen";

output example.parser;

lex ExampleLex tokens tokenDefs:ExampleTokens uses tokenDefs:ExampleDefs{

...

}

peg ExamplePEG tokens tokenDefs:ExampleTokens {

...

}

File storage/searching

External files can either come from the user (source) folder, or from the library folder. I'm not

sure if library folders are searched first, or user/source ones. You'll have to

check (behaviour will remain consistent in future versions...).

Comments

Comments in AustenX files follow the C-like language form. That is, there

are single line comments starting with "//", and block comments that start with

"/*", and end with "*/".

Tokens

The first step in creating an Austen parser is to define the tokenisation. This step itself can be broken down into two further components. The

first is a definition of what tokens are identified. This definition does not describe how the tokens are parsed. The second step,

which could in theory be provided by hand coding, or an external application, is scanner definition. This second step in Austen is

provided by a simple non-deterministic finite automaton (NFA) based lexical analyser, in the tradition of that provided by Lex,

and other similar programs.

Defining tokens

Token definition is provided in a tokens block. An example of such a block is as follows:

tokens Example {

String ID;

boolean BOOLEAN;

String STRING;

int NUMBER;

void PLUS_SYMBOL;

void MINUS_SYMBOL;

void WHALE_KEYWORD, STAR_SYMBOL, ASSIGNMENT;

String ERROR;

}

By my personal convention, token names are fully capitalised (with underscores to separate words). In the example above, a

list of tokens is given with the token type given first followed by the token name, followed (for this version) by a semi-colon.

A tokens block is just a list of all such tokens. A tokens block is marked by the keyword tokens followed by the name

of the block (in the above case, "Example"). The current valid types of a token are as follows:

- String, or string - a string of text

- int - an integer number (32 bit)

- double or float - a floating point number

- boolean - a boolean value that is either true or false

- void - no type (or token has no specific data attached)

As noted in the example, multiple tokens can be expressed at once, if they share the same type.

Library tokens

A library of tokens can be created to be reused in multiple token definitions. A library is defined in a similar way

to a normal tokens block except the key word library appears before the tokens keyword. So, for example,

the following is a simple library token definition:

library tokens Basics {

boolean BOOLEAN;

String STRING, ERROR;

int NUMBER;

}

A tokens library can be incorporated into a normal tokens block by having that block "extend" the library (hello Java),

such as in the following:

tokens Example2 extends Basics {

String ID;

void PLUS_SYMBOL;

void MINUS_SYMBOL, STAR_SYMBOL;

void WHALE_KEYWORD;

void ASSIGNMENT;

}

Given the definition of Basics, the definition of Example2 would essentially be identical with that

of Example. One cannot extend a non-library tokens definition, but multiple inheritance is possible, and a

library block may extend another library block. Again, I was a bit tricksy there, and included two token names on one line, which

is perfectly fine (as long as they have the same type).

Resulting generated code

When Austen is run with a tokens definition, some code is generated. For Java, this code will appear in

the sub package "lexi.tokens", from the base package (so if "output x.y;" occurred earlier, the classes would appear

in the "x.y.lexi.tokens" package). There will be an "ErrorToken" interface, and a general token descriptor interface based

on the name of the tokens block (so, in the case of Example2, the resulting Java interface will be "Example2Token.java"). For

the most part, the end-user will not directly deal with these classes.

Lexical analysis

Once a definition of the tokens used has been created, the next step

in tokenisation will be to create the "lexical scanner" for such tokens.

Multiple scanners could be created, as well as ones by hand, or perhaps using a third party tool.

In fact, no scanner need be created in order to move on to

the next step of creating a PEG parser, but the resulting parser would

not be very useful (as it would know what tokens it needed, but nothing would be able to provide them). This

section will describe the lexical analysis currently provided by Austen.

The Lex Block

In Austen a lexical analyser is defined within a lex block. This block will look similar to the following:

lex MyLex tokens Example {

initial normal;

ALPHA = {'a'-'z'}|{'A'-'Z'};

DIGIT = {'0'-'9'};

STRING_CHAR = ALPHA|DIGIT|' ';

ALPHA_HEAD = ALPHA|'_';

ALPHA_TAIL = ALPHA_HEAD|DIGIT;

mark baseMark;

mode simpleComment {

pattern ( "\n" ) { newline; switch normal; }

pattern ( . ) { }

}

mode normal {

pattern ( "+" ) { trigger PLUS_SYMBOL; }

pattern ( "-" ) { trigger MINUS_SYMBOL; }

pattern ( "*" ) { trigger STAR_SYMBOL; }

pattern ( "//" ) { switch simpleComment; }

pattern ( "\n" ) { newline; }

pattern ( {"\t"|" "} ) { }

pattern ( "true" ) { trigger BOOLEAN; }

pattern ( "false" ) { trigger BOOLEAN; }

pattern ( "whale" ) { trigger WHALE_KEYWORD & ID="whale"; }

pattern ( '"' $-start STRING_CHAR* $+end '"' ) {

trigger STRING = range(low=start,high=end);

}

pattern ( ALPHA_HEAD ALPHA_TAIL*) {

trigger ID;

}

pattern ( DIGIT+ '.' DIGIT+ ) {

trigger REAL;

}

pattern ( DIGIT+ ) {

trigger NUMBER;

}

}

}

The above example has a lot happening in it, so let's start with the first line:

lex MyLex tokens Example {

The lex keyword marks the start of the lexical analyser definition, and the following identifier is the name

(in this case "MyLex"). This is followed by the tokens keyword which is then followed by the name of the

tokens definition (in this case, we will use the "Example" tokens block described earlier). There is a little bit more

that can come after that, but we'll leave that to later. Let's turn now to the next line:

initial normal;

First, the semi-colon is (usually) optional; I just use it as force of habit. The main point of the initial declaration is

to tell Austen what "modes" the analyser starts out in. In this case, we've selected "normal" (which is defined later). I

chose to write mode names in lowerCamelCase. It is possible to list multiple starting modes, separated by commas. I'm

not sure what the practical use of this might be, but it's there if you need it.

The next bits are where the important stuff happens. Patterns matched are defined within "mode" blocks,

which are "modes of parsing". Different modes allow the analyser to interpret text differently depending on

what mode the analyser is currently in (and due to the NFA nature of the analyser, it can be in more than one mode - though

this is probably not something one would take advantage of).

The first mode defined is the "simpleComment" one, as follows:

mode simpleComment {

pattern ( "\n" ) { newline; switch normal; }

pattern ( . ) { }

}

The block starts with the mode keyword and is followed by the mode name, and then the usual curly brackets to

surround the mode definition. Within the mode block are a series of pattern declarations, marked by the pattern keyword.

A pattern declaration is of the form:

pattern ( regular expression ) { actions to do on match }

In the above example, the first pattern matches "\n", which means the new line character (so the unix one - I'll get

to a better solution later). The actions, on matching a new line character are first, to mark a new line,

with the built-in newline command. The purpose of the newline command is to reset the internal line and character

counters, which are stored with each token. The second command is the switch command, which instructs the analyser to

switch modes, which in this case, is to the "normal" mode.
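As a rough picture of the bookkeeping behind the newline command, the following Java sketch tracks a line counter and a character counter while walking over some text. The class and method names are hypothetical, the counters are assumed to start at 1, and "reset" is interpreted here as moving to the next line and restarting the character count; Austen's own internals may well differ.

```java
// Hypothetical sketch of line/character bookkeeping; not Austen's implementation.
class PositionCounterSketch {
    int line = 1;      // assumption: lines are counted from 1
    int character = 1; // assumption: characters within a line are counted from 1

    // Called for every consumed character that does not end a line.
    void advance() {
        character++;
    }

    // Plays the role of the "newline" action: next line, character counter restarted.
    void newline() {
        line++;
        character = 1;
    }

    // Returns {line, character} for the character at the given offset in text.
    static int[] locate(String text, int offset) {
        PositionCounterSketch counter = new PositionCounterSketch();
        for (int i = 0; i < offset; i++) {
            if (text.charAt(i) == '\n') {
                counter.newline();
            } else {
                counter.advance();
            }
        }
        return new int[] { counter.line, counter.character };
    }

    public static void main(String[] args) {
        int[] where = locate("ab\ncd", 4); // offset 4 is the 'd' on the second line
        System.out.println(where[0] + ":" + where[1]);
    }
}
```

If the newline action were left out of the pattern, the line counter in a scheme like this would never move, and every token would be reported on the first line.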

Triggering a token

Matching a token does not mean it is automatically stored. All the pattern matches in the "simpleComment" mode do not

store the matched text in any form. This is not surprising, as you've probably guessed, the "simpleComment" mode is somehow

linked to reading user comments, which a compiler probably is not interested in. The command that actually indicates that

a token should be stored is the trigger command. There are a few things to this command, but let's begin with a simple

example from the "normal" mode given above:

pattern ( "*" ) { trigger STAR_SYMBOL; }

In this example, the asterisk, or "star symbol", has been matched, and the action uses the trigger command to

"trigger" the "STAR_SYMBOL" token, as defined in the tokens definition "Example". Looking back at the definition for "STAR_SYMBOL",

the type is void, so none of the input text is stored, but a token object is appended to the current list of tokens. All

tokens currently include information on the line number and character number (within the line) that the token was matched

at.

Trigger just means adding the specified token to the current list of tokens, but there is a little more to it than that.

First, a single match can trigger multiple tokens in serial. That is, we could have written:

pattern ( "*" ) { trigger STAR_SYMBOL; trigger PLUS_SYMBOL; }

This would mean that for every "*" in the input, two tokens are added to the list of tokens. Furthermore, we

can add multiple tokens concurrently, or to be more honest, we can trigger a token with multiple "personalities". This

is done by using one trigger command but specifying multiple token names, such as the following:

pattern ( "*" ) { trigger STAR_SYMBOL, PLUS_SYMBOL; }

or...

pattern ( "*" ) { trigger STAR_SYMBOL & PLUS_SYMBOL; }

Either a comma-separated list or an ampersand-separated one is acceptable. In either case, what is meant is

that the token triggered can be treated as either a "STAR_SYMBOL" or a "PLUS_SYMBOL". This combining of tokens is more

useful with keywords, as keywords can also be identifiers. So for example, the "whale" keyword is handled as:

pattern ( "whale" ) { trigger WHALE_KEYWORD & ID; }

The point of doing this will not become truly clear until later when we look at the PEG parsing component,

but essentially, it is to aid in providing some of the power of scannerless parsing that PEGs normally use, even

when using a scanner. As will be seen, PEG parsers, even with a separate tokenisation step, can handle keywords in

a language as, for example, an identifier, depending on context (so in Java, with PEG parsing, a class could be

called "class" or "java", without too much trouble). Moreover, if we are combining languages, we can allow

keywords in one language, to be used as identifiers in another.
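One way to picture a token with multiple "personalities" is as a single token object carrying a set of types. The Java sketch below illustrates that idea only; the names MultiTypeTokenSketch, Kind, and Token are hypothetical and are not the classes Austen generates.

```java
import java.util.EnumSet;
import java.util.Set;

// Hypothetical model of a token that can be read as more than one type.
class MultiTypeTokenSketch {
    enum Kind { WHALE_KEYWORD, ID, STAR_SYMBOL }

    static final class Token {
        final String text;
        final Set<Kind> kinds;

        // At least one kind is required; further kinds are optional.
        Token(String text, Kind first, Kind... rest) {
            this.text = text;
            this.kinds = EnumSet.of(first, rest);
        }

        // A parser rule asks whether the token can play the role it needs.
        boolean is(Kind kind) {
            return kinds.contains(kind);
        }
    }

    public static void main(String[] args) {
        // Corresponds in spirit to: trigger WHALE_KEYWORD & ID;
        Token whale = new Token("whale", Kind.WHALE_KEYWORD, Kind.ID);
        System.out.println(whale.is(Kind.WHALE_KEYWORD));
        System.out.println(whale.is(Kind.ID));
        System.out.println(whale.is(Kind.STAR_SYMBOL));
    }
}
```

A rule that expects a keyword and a rule that expects an identifier can then both accept the same token, and which one succeeds depends on where in the grammar the token is encountered.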

Assigning values with triggers

In the previous example, with the "whale" keyword, in the original example the line was actually as follows:

pattern ( "whale" ) { trigger WHALE_KEYWORD & ID = "whale"; }

The use of the assignment at the end of the trigger is important, especially when using tokens of type "void". For

non-void tokens they are assigned a value based on the parsed text. If the type is a non-string type then the assigned

value will be converted to the type specified. If a trigger is given for more than one token at once, and one is

void then it will not store the parsed text. The use of the assignment sets the value to a constant (in the

above example, "whale"). Otherwise, when that token is read as an "ID" it would be read as "void", and not "whale".

Ideally, one ought to assign constant values to all void tokens. (Future versions of Austen will do this

automatically for non-variable pattern matches; eg "cat", versus "ca*t", as explained later.)

General regular expression operators

Okay, we have described the basic matching of set text; to do this, just use the text to match surrounded by

double quotes. Regular expressions for pattern matching are usually more complex than that though, and the lexical analyser

in Austen is no different.

Matching single characters

First, it should be noted that single characters can also be matched by placing them

in single quote marks. You can match a double quote this way, and a single quote can be matched within a double-quoted

string (single quotes should really just be for multi-character strings like double quotes, and I suspect they will

be in the near future). You can also match escaped characters, such as '\n', to match new line characters (see above),

as well as provide explicit Unicode values, just by using \45, for example, to match the character with code 45 (no

quotes needed, but can be used).

Next, it can be noted that any character can be matched with '.' (that's a full-stop, without the quotes).

Ranges can be matched by separating them with a minus symbol. That is, 'a'-'z' matches all lowercase letters.

Matching repetitions

To match repetitions, as per normal, there are the "+" and "*" operators, which match one or more, and zero or more

respectively. That is, to match one or more of the first lowercase letter of the alphabet, use 'a'+.

The range operation (the minus bit) has higher precedence (see below), so to match all lowercase letters, one or more times, use

'a'-'z'+.

Matching possibilities

If something is optional, use the "?" operator, or the "maybe" operator. For example, "cat" "dog"? "cat"

means match "cat" (must), followed possibly by "dog", and then certainly "cat" again (so "catdogcat" or "catcat").

If something must be matched, but it can be one of a number of options, then the "|", or "or" operator can be used.

This operator just separates a list of possibilities. The longest match (and first in order) is the one used (it is not

a greedy match). So "cat" |"dog" |"frog" matches either "cat", "dog", or "frog". If "hell" | "hello" is used

on text "hello", then the token is parsed as "hello".
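The longest-match rule for literal alternatives can be sketched in a few lines of Java. This is only an illustration of the rule as described above, with a hypothetical class and method name; it says nothing about how Austen's NFA actually arrives at the same answer.

```java
import java.util.Arrays;
import java.util.List;

// Hypothetical sketch of "longest match wins, earliest alternative breaks ties".
class LongestMatchSketch {
    // Returns the longest alternative found at pos in input, or null if none matches.
    static String longestMatch(List<String> alternatives, String input, int pos) {
        String best = null;
        for (String alt : alternatives) {
            boolean matchesHere = input.startsWith(alt, pos);
            // Strictly longer only, so an earlier alternative is kept on a tie.
            if (matchesHere && (best == null || alt.length() > best.length())) {
                best = alt;
            }
        }
        return best;
    }

    public static void main(String[] args) {
        List<String> options = Arrays.asList("hell", "hello");
        System.out.println(longestMatch(options, "hello", 0));   // the longer option is chosen
        System.out.println(longestMatch(options, "hell no", 0)); // only the shorter one fits
    }
}
```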

Combining operators,

one could use {"cat" |"dog"}+ |"frog", which means match one or more of either "cat" or "dog" (so,

"catcatdogcatdog" would match), or just match one "frog". But wait! What is with the brackets you ask? I don't get

that last example! Read on.

Blocks

To block matching elements together, just enclose them in "curly" brackets. That is, {'a' 'b'}+ means

match, one or more times, an 'a', followed by a 'b' (of course, you can just write that as "ab"+).

Another example would be {"cat" "dog"?}* "cat", which would match "cat", with a possible "dog", zero

or more times, followed by a final "cat" (so in this case, for every "dog" there must be a preceding "cat", but for every

cat there need not be a "dog", and we must finish on a "cat").
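For readers more familiar with Java's regular expressions, the last example can be approximated with java.util.regex, where a non-capturing group (?: ... ) plays the role of the curly brackets. This is an analogy only: Java's engine is a backtracking matcher rather than Austen's NFA, and the class name below is hypothetical.

```java
import java.util.regex.Pattern;

// Rough java.util.regex counterpart of the Austen pattern {"cat" "dog"?}* "cat".
class BlockPatternSketch {
    static final Pattern CATS = Pattern.compile("(?:cat(?:dog)?)*cat");

    // True when the whole string is matched by the pattern.
    static boolean matches(String text) {
        return CATS.matcher(text).matches();
    }

    public static void main(String[] args) {
        System.out.println(matches("cat"));       // zero repetitions, then the final cat
        System.out.println(matches("catdogcat")); // one repetition with the optional dog
        System.out.println(matches("catdog"));    // does not finish on a cat
    }
}
```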

Defines (or macros)

You may call them "macros", I have called them "defines", which may not be the best word, but mostly, it does not matter. The point

of a define is to allow easy reuse of commonly used patterns. Patterns are stored in a define, and then just

used within the regular expressions by simply using the define's name. First, to define a define (macro):

ALPHA = {'a'-'z'}|{'A'-'Z'};

Defines are placed within the lex block, as root elements (that is, not within "mode" blocks). Defines can

refer to other defines (order is not important).

A simple example of the use of a define in the example lex block is:

pattern ( DIGIT+ ) {

trigger NUMBER;

}

This just means, a "NUMBER" token is triggered by one or more digits (which are anything between '0'-'9'). A more complex

example, which uses defines referencing defines (if you backtrack through the definitions) is:

pattern ( ALPHA_HEAD ALPHA_TAIL*) {

trigger ID;

}

Triggers, token types, and match text

Depending on the type of the token (as defined in the tokens block), data may be stored with

each token. The line number and character number of the token is stored with every token, but if the

token type is not void then data relating to the string matched for the token, is stored with

the token. If the type is int, this data is stored as a 32-bit integer, if it is string

then a string is stored (and so on).

Normally, the data stored is derived from the entire matched text,

and will be parsed according to type. That is, in the Java implementation, an integer value will be

obtained by calling Integer.parseInt() on the matched string. Sometimes though, only segments of the

matched string are required, and sometimes, values might want to be given directly. Another possibility is

that a different way of converting a string to an alternative primitive type might be required; currently, Austen

offers no way to do this, but rest assured, it is a very high priority, and will be incorporated soon (along with

the ability, hopefully, to define and handle more complex datatypes).
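The conversion from matched text to a typed value can be sketched as follows. Only the use of Integer.parseInt() for int tokens is stated above; the handling of the other types, and the names TokenValueSketch and convert, are assumptions made for the sake of illustration.

```java
// Hypothetical sketch of converting matched text according to the token type.
class TokenValueSketch {
    static Object convert(String type, String matched) {
        switch (type) {
            case "int":
                return Integer.parseInt(matched);     // as described for the Java implementation
            case "double":
            case "float":
                return Double.parseDouble(matched);   // assumption
            case "boolean":
                return Boolean.parseBoolean(matched); // assumption
            case "String":
            case "string":
                return matched;
            case "void":
                return null;                          // void tokens carry no data
            default:
                throw new IllegalArgumentException("Unknown token type: " + type);
        }
    }

    public static void main(String[] args) {
        System.out.println(convert("int", "42"));
        System.out.println(convert("boolean", "true"));
        System.out.println(convert("void", "*"));
    }
}
```

Note that Integer.parseInt() throws a NumberFormatException for text that is not a valid 32-bit integer, so a pattern that triggers an int token should only match text that parses cleanly.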

Going back to what Austen can do, the first thing that is useful, is being able to select portions of the matched

text to be used for the trigger. This is seen in the example in the handling of strings in the input (strings being

things in quotes here). In particular, examine the following:

pattern ( '"' $-start STRING_CHAR* $+end '"' ) {

trigger STRING = range(low=start,high=end);

}

In this case, the "STRING" token is being triggered, but the supplied data (indicated by the "=") is given as

a range between two points. Unfortunately, things get a little complex here. The position variables can be

placed in the matching expression, by marking them with "$".

The definition provided is actually a bit of overkill, so we will rewrite it as the following, returning to

the extra bits later:

pattern ( '"' $start STRING_CHAR* $end '"' ) {

trigger STRING = range(low=start,high=end);

}

In this case, "$start" means, set the "start" variable to the cursor position of the next read character, when

the analyser has progressed this far. The same occurs for the "end" variable, with "$end". In between these two

markers we match zero or more string characters, and it's this bit that we are interested in — not the double

quotes on the end. So, in the trigger command we set the trigger data to the range specified. This is

a built-in function (possibly the only function) that takes two parameters. Parameter order is not important,

as functions here only allow named parameters (I think — if not, I am not going to tell you because I would

rather you used the named form), where the "low" parameter is the beginning point, and "high" parameter is the end point (plus

one). The "$" is only used with variables within the regular expression.
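
As a rough illustration of what the range amounts to, here is a minimal Java sketch. It assumes that positions are character indexes into the input and that "high" is exclusive (the end point plus one, as described above); the class and method names are hypothetical and are not part of the code Austen generates.

```java
// A sketch only: RangeSketch and its method are hypothetical names, not Austen's generated API.
class RangeSketch {
    /** Returns the characters from index low (inclusive) to index high (exclusive). */
    static String range(String input, int low, int high) {
        return input.substring(low, high);
    }

    public static void main(String[] args) {
        String input = "\"hello\"";       // the matched text, including both quotes
        int start = 1;                     // position of the first character after the opening quote
        int end = input.length() - 1;      // position of the closing quote (last wanted index plus one)
        System.out.println(range(input, start, end));
    }
}
```

With the quoted input above, only the characters between the two quotes are kept, which is the data the "STRING" trigger is given.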

Minimum/maximum match.

Returning to the way the "STRING" trigger rule was originally written, note that the position variables

have an additional "+" or "-" mixed in (that is, $-start and $+end). The

astute amongst you may wonder what happens if the position variables are placed within a block that could

be repeated multiple times. If the "-" is attached, the minimum position will be stored; if the "+" is attached,

the maximum position will be stored. I will not tell you the default behaviour (but I suspect it is the maximum

option), because it would be good form to put them in regardless (as I have here, even though it makes no difference,

as there is only ever one match for each position variable).
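
To make the minimum/maximum distinction concrete, here is a small Java sketch of a position variable that sits inside a repeated block and is therefore reached several times. The class is hypothetical; how the generated analyser actually stores positions is not described in this manual.

```java
// Hypothetical model of a position variable that may be set more than once.
class PositionVarSketch {
    private int min = Integer.MAX_VALUE;
    private int max = Integer.MIN_VALUE;

    /** Called each time the marker is reached, with the current cursor position. */
    void record(int cursor) {
        min = Math.min(min, cursor);
        max = Math.max(max, cursor);
    }

    int minimum() { return min; }   // what "$-name" would keep
    int maximum() { return max; }   // what "$+name" would keep

    public static void main(String[] args) {
        PositionVarSketch v = new PositionVarSketch();
        for (int cursor : new int[] {3, 5, 7}) v.record(cursor);
        System.out.println(v.minimum() + " " + v.maximum());
    }
}
```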

Referencing variables (version 1.1)

As of version 1.1 of AustenX, variables referenced in lex expressions may or may not have a "$" at the start. This

is to ensure consistency of appearance with the PEG use of variables (without breaking version 1.0 code). So, for example, the following is also valid:

pattern ( '"' $start STRING_CHAR* $end '"' ) {

trigger STRING = range(low=$start,high=$end);

}

Pattern Actions

The "trigger" command is an example of a pattern action — the things that happen when a pattern is

matched (or in start/end blocks). There are a number of other action

commands that may be used. In all cases the semi-colon at the end of the action is optional.

The switch action

One action mentioned so far is the "switch" command. This allows the user

to switch the finite state automaton to a different mode (or modes). It consists of

the keyword "switch" followed by the name of the mode to switch to. Currently only one mode

is allowed to be used at a time, but I recall an earlier version being able to switch to multiple

modes — maybe this will reappear in a future version.

The print/debug commands

Two commands are supplied to allow printing of information while doing the lex parse, for the

intended purpose of debugging. The format for print is "print" ( [expressions] ), where [expressions]

is a comma separated list of suitable expressions to print (the most straightforward being "strings"). For debug, it is "debug" ( [expressions] ).

Both debug and print work the same, except debug prints via the simple Debug framework in the Solar project, which basically prepends the class

and line number of the caller to the output text. For example:

pattern ("cat") { print("cat",1); }

pattern ("dog") { debug("dog"); }

The "if" statement (v1.1 on)

From version 1.1 of AustenX there is also an "if" statement, which works roughly like "if" in most

languages (certainly Java). The format is "if" ( [expression] ) [statement],

followed by an optional "else" clause (eg, "else" [statement] ). For example:

pattern ("cat") {

if(utils.firstCat()) {

trigger ID="cat1"

} else

trigger ID="cat2";

}

Note, the use of utils.firstCat() is explained in the section on types and variables.

Statement block (v1.1 on)

New with version 1.1 is the ability to block statements by surrounding them with curly brackets (like

c-like languages). This only really has an effect for the "if" statement (see above).

The "mark" and "reverse from" actions (v1.1 on)

The marking mechanisms are discussed later.

The "newline" action

This is described elsewhere, and advances the line marker by one, and resets the character marker.

Method calls

With the introduction of class definitions, it is now possible to

interact with user code directly in the lexical (and packrat) parsing. This follows a similar format

to other languages, except in passing parameters. Essentially, a method is called by naming

the related variable, a full stop symbol, and then the name of the method to call, followed

by the parameters in brackets (like one would do in Java). Consider the following example:

class Test {

void meow();

}

lex MyLex(Test t) tokens Example {

...

pattern("cat") {

trigger ID="cat";

t.meow();

}

...

}

In the above example, the "meow" method was called on the "t" variable, when the pattern "cat"

was matched. When the method has parameters, those can be supplied in any order but require

tagging with the parameter name (much like keyword arguments in Python). So, with parameters, an example would be:

class Test2 {

void woof(int x, String name);

}

lex MyLex(Test2 t) tokens Example {

...

pattern("cat") {

trigger ID="cat";

t.woof(name: "cat", x: 4);

}

...

}

Order and matching

Patterns are matched to the longest match, and if the same length, to the first defined. If a rule is never

matched, because prior rules cover all possibilities, then an error is generated. For example:

pattern ( "He" "l"+ "o" ) {} //Rule A

pattern ( "Hell" "o"? ) { } // Rule B

pattern ( "Hell" ) { } // Rule C

In this case, the text "Hello" would be matched by rule A, even though rule B can match it too (because rule A is defined

before rule B), and rule C would generate an error, because it will never match anything, because "Hell" is "caught" by rule B.

If the order of rule C and B is swapped the compiler should then reject rule B, because all strings that B matches ("Hell", and "Hello")

are matched by previous rules (A for "Hello", C for "Hell").
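
The selection policy (longest match first, then earliest definition) can be sketched in Java as follows. This uses java.util.regex as a stand-in for Austen's state machine, which is an assumption made purely for illustration; the three regular expressions are hand translations of rules A, B and C, and for these simple patterns the regex engine's greedy match is also the longest one.

```java
import java.util.List;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Illustration only: Austen builds a state machine, it does not use java.util.regex.
class LongestMatchSketch {
    static final List<Pattern> RULES = List.of(
        Pattern.compile("Hel+o"),    // Rule A: "He" "l"+ "o"
        Pattern.compile("Hello?"),   // Rule B: "Hell" "o"?
        Pattern.compile("Hell"));    // Rule C: "Hell"

    /** Returns the index of the rule that wins at the start of input, or -1 if none match. */
    static int winner(List<Pattern> rules, String input) {
        int best = -1;
        int bestLength = -1;
        for (int i = 0; i < rules.size(); i++) {
            Matcher m = rules.get(i).matcher(input);
            // lookingAt() anchors the match at the start of the input.
            if (m.lookingAt() && m.end() > bestLength) {
                best = i;               // strictly longer only, so earlier rules win ties
                bestLength = m.end();
            }
        }
        return best;
    }

    public static void main(String[] args) {
        System.out.println(winner(RULES, "Hello"));  // rule A (index 0) wins the tie with B
        System.out.println(winner(RULES, "Hell"));   // rule B (index 1) wins the tie with C
    }
}
```

Rule C (index 2) never wins for any input, which is the situation Austen reports as an error.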

Modularity for lexical analysis

There are two parts to modularity for the lexical analysis. The first is around libraries for define macros. The second

is for the pattern matching itself.

Define macro libraries

To create a library of define macros that you might want to reuse in your projects, you can create a define library block. An example

of such a thing is as follows:

define library GeneralDefs {

STRING_ALPHA = {ALPHA|DIGIT|" "|"'"};

ALPHA = {{'a'-'z'}|{'A'-'Z'}};

DIGIT = {'0'-'9'};

ALPHA_HEAD = {ALPHA|'_'};

ALPHA_TAIL = {ALPHA_HEAD|DIGIT};

NEWLINE = {"\r"|"\n"|"\r\n"};

}

The above define library specifies the define macros used in our example lex block. Sneakily placed within that define library is a better pattern for matching new-line characters, which will work

across all platforms. To substitute

them in, we use the uses keyword in the lex definition. That is, we would change to the following:

lex MyLex tokens Example uses GeneralDefs{

...

}

More than one define library may be referenced; they just need to be separated by commas (after the uses keyword).

Pattern libraries

As libraries of define macros can be created, so too can libraries of patterns matched (and their resulting operations).

To do this we use a pattern library declaration, such as:

pattern library Strings tokens Example uses GeneralDefs {

pattern ( '"' $-start STRING_CHAR* $+end '"' ) {

trigger STRING = range(low=start,high=end);

}

}

Basically, what goes within a pattern library is what would go within a mode block within a lex

declaration. You cannot, yet, create libraries that have more than one mode. Like lex declarations, a

pattern library is connected with a tokens declaration, and may use external define libraries, like

a lex block.

To include a pattern library within a lex declaration is slightly different to other modular features,

where the inclusion is done in the "header" of the declaration. Because order is important, inclusion is done "inline" so

as to indicate which matches are of higher importance, and which are of lower importance. We do this with the

library keyword, and an example would be to switch our "Strings" library into our well-worked example lex block as

follows:

lex MyLex tokens Example uses GeneralDefs {

...

mode normal {

...

pattern ( "whale" ) { trigger WHALE_KEYWORD & ID; }

library Strings();

pattern ( ALPHA_HEAD ALPHA_TAIL*) {

trigger ID;

}

...

}

}

Thus, the important bit is:

library Strings();

You will notice the two round brackets, which make things look like parameters can be passed. This is explained later

in the section on user variables.

The final thing to note is that the tokens used by the pattern library must be compatible with the

tokens used by the lex declaration. Remembering that a tokens declaration can extend other tokens

declarations, the pattern library must either use the same tokens block as the lex block, or one of the

extended library tokens block. Pattern libraries, unlike lex blocks, can use library based tokens declarations.

Multiple matches

One last thing that needs to be mentioned is what happens when more than one rule matches some text. Like usual

lexical analysers, two things that matter are firstly, what pattern matches the longest bit of text, and second, what pattern

came first. So basically, assuming I have some input text "cats like hats", if I have one pattern that matches "cat", and one that matches "cats", the one that matches "cats" will

be the one used, regardless of order. If I have two patterns that match "cat" (but not "cats"), then whatever rule is listed first is the

one that is used. This is why library patterns are specified inline - where the library reference goes indicates what patterns should

override the library ones, and what patterns are overridden by the library.

Lastly, one final note of importance. If one pattern completely occludes another pattern from ever being matched, AustenX will currently

stop and produce an error. Thus, the following will produce an error:

pattern ("c"? "a"* "t" "s"?) { }

pattern ("cat") { }

This is because on the text "cat" the second pattern is never used (and that's the only thing it can match), because

the first rule will match "cat" first. The following is not an error:

pattern ("c"? "a"* "t" "s"?) { }

pattern ("cat" "s"*) { }

This is not an error, because "catsss" is matched by the second rule, but not by the first. Also, this further example is

not an error:

pattern ("cat") { }

pattern ("c"? "a"* "t" "s"?) { }

The above example seems very similar, but the order is changed. Now, if the input is "cat" (and not "cats"), then

the first pattern will match (and so will the second), but the order counts, and the first is taken. The second pattern is

not completely occluded by the first, as there is other input it can match, not covered by "cat" (eg. "aaaats").
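
The occlusion check can be approximated by brute force, as in the Java sketch below. This is not how AustenX does it (presumably it reasons over the state machine directly); it simply enumerates every string over a small alphabet up to a bounded length and records which rule would claim each one, so it can miss strings longer than the bound. The regular expressions are hand translations of the patterns above, and all names are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Pattern;

// Bounded brute-force approximation of the "pattern is never used" check.
class OcclusionSketch {
    /** Returns the indexes of rules that claim no string of length <= maxLength. */
    static List<Integer> neverUsed(List<String> regexes, String alphabet, int maxLength) {
        List<Pattern> rules = new ArrayList<>();
        for (String regex : regexes) rules.add(Pattern.compile(regex));
        boolean[] used = new boolean[rules.size()];

        List<String> current = new ArrayList<>();
        current.add("");
        for (int length = 0; length <= maxLength; length++) {
            List<String> longer = new ArrayList<>();
            for (String s : current) {
                for (int i = 0; i < rules.size(); i++) {
                    if (rules.get(i).matcher(s).matches()) {
                        used[i] = true;   // the first rule to match s claims it
                        break;
                    }
                }
                if (length < maxLength) {
                    for (char c : alphabet.toCharArray()) longer.add(s + c);
                }
            }
            current = longer;
        }

        List<Integer> unused = new ArrayList<>();
        for (int i = 0; i < used.length; i++) if (!used[i]) unused.add(i);
        return unused;
    }

    public static void main(String[] args) {
        System.out.println(neverUsed(List.of("c?a*ts?", "cat"), "cats", 6));
        System.out.println(neverUsed(List.of("c?a*ts?", "cats*"), "cats", 6));
        System.out.println(neverUsed(List.of("cat", "c?a*ts?"), "cats", 6));
    }
}
```

Only the first pair of patterns reports an unused rule, matching the three cases discussed above.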

Advanced lexical analysis

This section details more advanced features of the lexical analysis (introduced from version 1.1 onwards).

Marks and token reversing

New as of version 1.1 are tools for marking tokens and reversing tokens. The point of this is

to allow right-to-left parsing, in a left-to-right framework (or perhaps more likely, top-to-bottom one).

By that, I mean reading right-to-left text that has been delimited by new lines (that go down the

page), or having sections within the language that switch direction. The facilities required for this

are the mark declaration and setting, and the "reverse from" action.

Declaring marks

To use a mark it must be declared first. This is done outside of modes, at the "root" of the lex

definition (see the original example for a declaration of a "baseMark" mark). The

format of a mark declaration is just the "mark" keyword, followed by a comma-separated list of mark

identifiers (more than one mark declaration can occur if that is preferred). So for example (the semi-colon is optional):

mark happy, sad;

Setting a mark

Setting a mark occurs inside an action/statement block (like the pattern match actions). It

uses a similar syntax to the mark declaration (except currently only one mark identifier per mark

statement). So for example:

pattern ("cat") { mark happy; }

pattern ("dog") { mark sad; }

Reversing from a mark

Again, the reversing from a mark statement occurs inside an action/statement block, like setting a mark.

It has the rather verbose syntax of "reverse" "from" [mark name], so for example:

pattern ("cat") { mark happy; }

pattern ("dog") { reverse from happy; }

The above example would place a mark when "cat" was matched, and then, when a "dog" is matched,

reverse all the intermediate tokens.
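
One way to picture the effect on the token stream is the Java sketch below. It assumes that a mark records how many tokens have been triggered so far, and that "reverse from" reverses everything triggered since then; whether tokens triggered by the marking or reversing patterns themselves are included is not specified here, so treat those details (and all the names) as assumptions.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

// Hypothetical model of a token stream with a single mark called "happy".
class MarkReverseSketch {
    private final List<String> tokens = new ArrayList<>();
    private int happy = 0;   // token count recorded by "mark happy"

    void trigger(String token) { tokens.add(token); }

    void markHappy() { happy = tokens.size(); }

    /** Reverses, in place, every token triggered since the mark was set. */
    void reverseFromHappy() {
        Collections.reverse(tokens.subList(happy, tokens.size()));
    }

    List<String> tokens() { return tokens; }

    public static void main(String[] args) {
        MarkReverseSketch lex = new MarkReverseSketch();
        lex.trigger("A");
        lex.markHappy();
        lex.trigger("B");
        lex.trigger("C");
        lex.trigger("D");
        lex.reverseFromHappy();
        System.out.println(lex.tokens());
    }
}
```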

Start and end blocks

New as of version 1.1 are some additional elements of a lex block for doing actions outside of

matching text. Currently there are two optional blocks for specifying what should be done at the

start of parsing, before any characters are examined, (the start block) and what is to be done at the end when

all characters have been matched (the end block).

Usage

Start and end blocks appear outside of modes at the moment (this may change in the future),

and currently should not include any "switch" statements to change modes. They follow the format

"start" { [actions] } or "end" { [actions] }, where [actions] is a set of actions (like "trigger"

etc), as would appear in a pattern match. So for example:

lex MyLex tokens Example {

initial normal;

...

end {

trigger ID="END OF FILE!";

print("The end!");

}

mode simpleComment { ... }

...

start {

trigger ID="Start OF FILE!";

print("It begins!");

}

}

Note, start and end blocks can be defined after or before other elements, and multiple blocks

can be defined - they will just be executed in order in the final generated code. Currently,

they probably do not work in library blocks (future feature!).

User supplied variables

An important feature added to lex (and packrat) parsing in version 1.1 is the ability to pass

information to the parsing processes from the calling user code. The basic type of variables

are "primitive" ones that are just string, integer, boolean, or double values. With lex blocks

the user supplied parameters are specified at the start of the lex definition (similar to how a

method/function in c-like languages might do it). So for example:

lex WithArgs(int x, string y) tokens Example {

...

}

In the previous example, the lexical analyser called WithArgs takes two parameters: an integer

called "x", and a string called "y". Variables in this case are typed (and the names follow

what is used in Java, though one can use "string", or "String" for string types). This changes the

generated code such that, with Java code generation, the WithArgsLexParser class has the following

constructor:

public class WithArgsLexParser {

...

public WithArgsLexParser(int xValue, String yValue) {

...

}

...

}

The supplied values can then be used in the parsing action blocks within the lex parser (eg,

the matching actions, or in the start/end blocks). One simple example using a boolean variable

might be for conditional actions to be taken in parsing, based on user code, not at compile time.

So for example:

lex Conditional(boolean doCat, String catText) tokens Example {

...

mode SomeMode {

pattern("cat") {

if(doCat) {

trigger ID=catText;

}

}

}

}

The above example makes two uses of supplied values. The first is for the if statement, and the

second is the text used in the token for the trigger statement.

Note that user variables cannot be used in the actual matching as the state machine

that does the matching is created at compile time, not run time.

Referencing variables (version 1.1)

As of version 1.1 of AustenX, variables referenced in lex expressions may or may not have a "$" at the start. This

is to ensure consistency of appearance with the PEG use of variables (without breaking version 1.0 code). So, for example, the following is also valid:

pattern("cat") {

if($doCat) {

trigger ID=$catText;

}

}

Complex types

Where user supplied variables most come into their own is with complex types.

Complex types are defined in Austen through the "interface" mechanism (see the section on user interface definition for how

to define an interface). If an interface has been defined, and a variable supplied of that interface type,

then the methods of that interface can be accessed in the action sections of the lex parsing. This

is very useful as it provides a way to add a lot of functionality that may otherwise be

missing from Austen, and restores the flexibility lost by making Austen language independent.

User interfaces describe types with methods that can be called, so variables of user interface

type can have their methods accessed in the action blocks. Again, the syntax follows Java. So,

for example, consider the following interface and usage:

interface Happy {

boolean flipCoin();

int convertHex(string base);

int add(int first, int second);

void notifyOfCat();

}

lex Complex(Happy h) tokens Example {

...

HEX_DIGIT = {{'0'-'9'}|{'a'-'f'}|{'A'-'F'}};

mode SomeMode {

pattern("cat") {

h.notifyOfCat();

if(h.flipCoin()) {

trigger ID;

}

}

pattern("0x" $start HEX_DIGIT+ $+end) {

trigger NUMBER=h.convertHex(base: range(low: $start, high:$end));

}

}

}

The above example illustrates two possible uses for complex types. We shall break it

down by first looking at the components used. First, an interface "Happy"

is defined. The lexical analyser "Complex" is defined with one parameter, "h", being of type

"Happy". From a code generation point of view, this means a "HappyUser" interface will be generated:

public interface HappyUser {

public boolean flipCoin();

public int convertHex(String base);

public int add(int first, int second);

public void notifyOfCat();

}

... and the created lex parser class will have a constructor like so:

public class ComplexLexParser {

...

public ComplexLexParser(HappyUser hValue) {

...

}

...

}

So, in order to use the ComplexLexParser, the user programmer must supply an object that

implements the "HappyUser" interface.

Now, during the lex parsing, the generated parser code will make calls to the HappyUser instance

provided, enabling interaction with user code during the parsing process. Thus, the "flipCoin"

method will be called whenever "cat" is matched (and parsing will act accordingly), and "convertHex"

is called whenever a "0x"-prefixed number is matched (to convert the hexadecimal digits into an integer value).

The method "notifyOfCat()" is called not as an expression, but as a command. Other than that, it

functions the same as other method uses.
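
On the user side, an implementation of the interface might look something like the Java sketch below. The interface is repeated here only so the sketch compiles on its own (in a real project it would come from the generated code), and SimpleHappy with its cat counter is a made-up example class.

```java
import java.util.Random;

// Repeated from the generated code shown above so this sketch is self-contained.
interface HappyUser {
    boolean flipCoin();
    int convertHex(String base);
    int add(int first, int second);
    void notifyOfCat();
}

// A hypothetical user implementation.
class SimpleHappy implements HappyUser {
    private final Random random = new Random();
    private int cats = 0;

    @Override public boolean flipCoin() { return random.nextBoolean(); }

    // Receives only the hexadecimal digits, without the "0x" prefix.
    @Override public int convertHex(String base) { return Integer.parseInt(base, 16); }

    @Override public int add(int first, int second) { return first + second; }

    @Override public void notifyOfCat() { cats++; }

    int catsSeen() { return cats; }
}
```

An instance of such a class would then be handed to the generated constructor, along the lines of new ComplexLexParser(new SimpleHappy()).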

Passing argument values

The only thing to note is the syntax for passing argument values to methods that require them.

This follows the approach taken for internal functions (eg "range"), where parameters are referenced

by name (so order does not matter). This can either be in the form "parameterName" "=" "expression", or

in the form "parameterName" ":" "expression". Unnamed values are not currently accepted.

Note also that the values passed can be reasonably complex expressions, including other calls to

methods/functions. So the following is valid:

pattern("4x" $start HEX_DIGIT+ $+end) {

trigger NUMBER=h.add(second: 4, first: h.convertHex(base: range(low: $start, high:$end)));

}

(Note that the "add" method is used above because no "+" functionality currently exists within

AustenX. There is only so far down the "making it a programming language" road I feel I need to

go at the moment, when the rare uses for such functionality can be covered by the complex type and

method approach).

System variables

From version 1.1 there are a few extra supplied system variables (which are overridden by

user variables of the same name). They just allow access to some of the data stored during

the parsing process. They are as follows:

- line, which returns the line number of the next token to be triggered (type int)

- char, which returns the character number of the next token to be triggered (type int)

- length, which returns the length of the full text of the currently matched string (should

only be accessed during pattern match action blocks, not the start/end ones) (type int)

- text, which returns the full text of the currently matched string (should

only be accessed during pattern match action blocks, not the start/end ones) (type string).

Variables and pattern libraries

Pattern libraries can also take user-supplied variables. To do this, the context in which that library is

used must provide the values for each variable. So, for example, one could define a library as such:

pattern library Cat(string catText, int number) tokens Example {

pattern ( "cat" ) {

trigger NUMBER=number;

print("Cat:", catText);

}

}

To use the library, values for the variables must be passed in the same named way that arguments are passed

to method calls. So for example:

lex MyCatUser tokens Example uses GeneralDefs {

...

mode normal {

...

pattern ( "whale" ) { trigger WHALE_KEYWORD & ID; }

library Cat(catText: "MEOW", number: 4);

...

}

}

Lazy evaluation

An important thing to note is that the values passed are lazily evaluated, so if a method call is passed as a value,

that method is called each time the library uses that variable. So for example:

interface Dog {

string dogText(int x);

int dogNumber(string x);

}

lex MyCatUser(Dog dog) tokens Example uses GeneralDefs {

...

mode normal {

...

library Cat(catText: dog.dogText(x: 5), number: dog.dogNumber(x: "woof"));

...

}

}

In this case, each time "cat" is matched (by the Cat library) a "NUMBER" token is triggered, with the

value of parameter "number", except this is provided by the evaluation of "dog.dogNumber(x: "woof")", and

this evaluation is done for each and every time "cat" is matched. Which means, if "cat" is matched 10 times

in a parse, then the "dogNumber()" method of the supplied "Dog" instance will be called 10 times.
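
The same behaviour can be mimicked in plain Java by passing a supplier rather than a value, as in the sketch below. This is only an analogy for the lazy evaluation described above; the generated code may well be organised differently, and every name here is hypothetical.

```java
import java.util.function.IntSupplier;

// Analogy for lazily evaluated library arguments.
class LazyArgSketch {
    int dogNumberCalls = 0;

    /** Stands in for the user's Dog.dogNumber method; counts how often it is called. */
    int dogNumber(String x) {
        dogNumberCalls++;
        return x.length();
    }

    /** Stands in for the Cat library's action: the argument is evaluated here, on each match. */
    int onCatMatched(IntSupplier number) {
        return number.getAsInt();
    }

    /** Simulates "cat" being matched the given number of times. */
    int run(int matches) {
        IntSupplier number = () -> dogNumber("woof");   // nothing is evaluated yet
        int last = 0;
        for (int i = 0; i < matches; i++) last = onCatMatched(number);
        return last;
    }

    public static void main(String[] args) {
        LazyArgSketch sketch = new LazyArgSketch();
        sketch.run(10);
        System.out.println(sketch.dogNumberCalls);
    }
}
```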

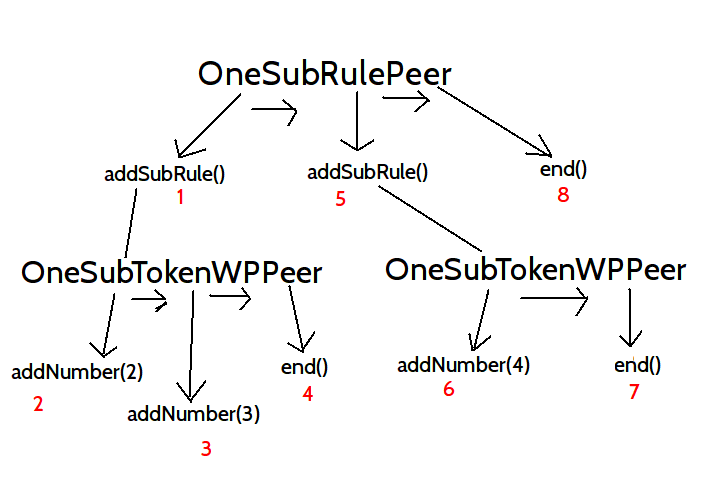

Parsing Expression Grammars (PEGs)

Lexical analysis is cool and all, but every dog and their octopus can do that, and probably better

than Austen. Where Austen aims to gain "oos" and "aas" is with its grammatical parsing through

Parsing Expression Grammar, or PEG, based parsing. First though, before we go into how to write an Austen PEG parser, an explanation of PEG parsing is required,

and the ideas of recursive descent that underpin it.

What is a PEG Parser?

Parsing Expression Grammars (Ford) are a way of describing how to understand the contents of a file (or rather a set of tokens).

It is based on a framework of describing how to read information, in a "greedy" way, rather than the often used

approaches which are based on a language rooted in text generation (eg, context free grammars). This makes a

lot more sense to me, which is why I think PEGs are cool.

Having said that, if you have used a generative based parser before (like Bison/YACC, or something similar)

then the syntax of PEG rules will seem very familiar; they only differ in subtle, but important ways. There is

also a certain similarity with the NFA based lexical analysis, and other regular expression languages, but

again, things are a little different.

The most basic expressions

A PEG is a set of parsing rules. A rule is a set of expressions (parsing expressions if you will). The most

basic of the expressions is a single character (for most PEG parsers), or in Austen's case, a token. So a rule might be:

HappyRule := ID

That's not Austen syntax (I'm just harking back to the good old days of Pascal),

but basically it says that HappyRule matches the token ID. Obviously

this is not very useful, so the next expression is the "series" expression, which is

just things being listed in match order. So, for example:

ZebraRule := ID NUMBER STRING

In this case, ZebraRule is matched when an ID token followed by a NUMBER followed by

a STRING is encountered. The next expression type is similar to the token, and is just another rule. So for

example, we could have:

ZebraRule := HappyRule NUMBER STRING

This version of ZebraRule functions to match the same input as

the previous version, but does so by referencing HappyRule. HappyRule

has to match for ZebraRule to match.

Now we have described the two basic expression types, we can talk about

how the parser algorithm works.

Greediness and Recursive Descent

The goal of the parser is to

find a rule that "consumes" the input starting from a particular token. If we

imagine a cursor sitting at a particular token, a rule expression consumes

zero or more tokens of input, leaving the cursor moved to after the last

token consumed. A rule is said to succeed if all the expressions of

that rule successfully consume input. If any expression fails to match the

input (and cannot consume it), then no input for that rule is consumed, and

the cursor returns to the point when the rule was first tested.

This process of consumption can be converted in a straightforward

way to computer code. For example, HappyRule might translate as:

function HappyRule() {

markCursor();

//consumeX() is a set of functions for each token X that

// return true if they consume the token at the current input,

// false otherwise.

if(!consumeID()) {

//Set cursor back to start

backToMark();

return false;

}

//Successful, so remove mark for good form

removeLastMark();

return true;

}

Given the definition of HappyRule, then ZebraRule might

follow as:

function ZebraRule() {

markCursor();

if(!HappyRule()) { backToMark(); return false; }

if(!consumeNumber()) { backToMark(); return false; }

if(!consumeString()) { backToMark(); return false; }

//Successful, so remove mark for good form

removeLastMark();

return true;

}

This is a nice translation from a parser rule to actual code, and

easy to understand (it roughly follows what happens within the code generated by AustenX). Note

the use of backtracking the cursor when a consumption fails.

Also of note is the call to HappyRule from within ZebraRule, and this is where things get interesting.
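
For those who would like something runnable, the two functions above can be written out in Java roughly as follows. This is a sketch of the idea only, not what AustenX generates: tokens are plain strings, the marks live on a stack, and backToMark() is assumed to discard the mark as well as restore the cursor.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.List;

// Runnable rendering of the HappyRule/ZebraRule pseudocode; all names are illustrative.
class RecursiveDescentSketch {
    private final List<String> tokens;
    private final Deque<Integer> marks = new ArrayDeque<>();
    private int cursor = 0;

    RecursiveDescentSketch(List<String> tokens) { this.tokens = tokens; }

    int cursor() { return cursor; }

    private void markCursor() { marks.push(cursor); }
    private void backToMark() { cursor = marks.pop(); }   // restore the cursor and drop the mark
    private void removeLastMark() { marks.pop(); }        // success: just drop the mark

    /** Consumes one token if it is the expected one. */
    private boolean consume(String expected) {
        if (cursor < tokens.size() && tokens.get(cursor).equals(expected)) {
            cursor++;
            return true;
        }
        return false;
    }

    /** HappyRule := ID */
    boolean happyRule() {
        markCursor();
        if (!consume("ID")) { backToMark(); return false; }
        removeLastMark();
        return true;
    }

    /** ZebraRule := HappyRule NUMBER STRING */
    boolean zebraRule() {
        markCursor();
        if (!happyRule()) { backToMark(); return false; }
        if (!consume("NUMBER")) { backToMark(); return false; }
        if (!consume("STRING")) { backToMark(); return false; }
        removeLastMark();
        return true;
    }

    public static void main(String[] args) {
        RecursiveDescentSketch ok = new RecursiveDescentSketch(List.of("ID", "NUMBER", "STRING"));
        System.out.println(ok.zebraRule() + " " + ok.cursor());
        RecursiveDescentSketch bad = new RecursiveDescentSketch(List.of("ID", "NUMBER", "ID"));
        System.out.println(bad.zebraRule() + " " + bad.cursor());
    }
}
```

On the second input the rule fails at the third token, and the cursor ends up back where it started.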

Repeated and possible patterns.

In the previous examples we have just matched either tokens, or other patterns. Like the lexical analysis there

are other operators available. Some of these are similar to regular expressions, such as the "*", "+", and "?" operators,

which perform the zero or more, one or more, or maybe, operations. So for example, a possible PEG pattern might be:

Strange :=

NUMBER PLUS_SYMBOL+ NUMBER?

The above matches a NUMBER followed by one or more "+" tokens, followed, possibly by another number. Where

this differs from the regular expressions is in the greedy nature of PEGs. The following example, which groups

items together as well, illustrates the consequences of the greedy consumption of input:

Strange2 :=

NUMBER PLUS_SYMBOL+ { PLUS_SYMBOL NUMBER } ?

The example Strange2 is similar to Strange, but after matching one or more "+" tokens, there

is the possibility of matching a "+" followed by a number (the "{" and "}" make a block, that the "?" operator

matches on as a whole). The problem is, the "PLUS_SYMBOL+" will consume all the "+" tokens, up to the first instance

of a token that is not a "+", so there is no "+" available for the last part of the pattern to match. If we

rewrite it one more time, we see the greedy nature of PEGs means some patterns cannot be matched:

Strange3 :=

NUMBER PLUS_SYMBOL+ PLUS_SYMBOL NUMBER

In the previous example, Strange3 would never match anything, as the second PLUS_SYMBOL must

be matched, but no appropriate input will be available for consumption after the "PLUS_SYMBOL+" has finished

doing its thing.
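
The consequence of greedy repetition can be checked with a few lines of Java. The sketch below hand-codes Strange and Strange3 over a list of token names, returning the position after the match or -1 on failure; it is an illustration of the PEG behaviour described above rather than anything AustenX produces, and the helper names are made up.

```java
import java.util.List;

// Hand-coded versions of Strange and Strange3 to show greedy repetition.
class GreedySketch {
    /** Consumes one expected token at pos; returns the new position or -1. */
    static int one(List<String> in, int pos, String expected) {
        return (pos < in.size() && in.get(pos).equals(expected)) ? pos + 1 : -1;
    }

    /** Consumes as many expected tokens as possible and never gives any back. */
    static int many(List<String> in, int pos, String expected) {
        while (pos < in.size() && in.get(pos).equals(expected)) pos++;
        return pos;
    }

    /** Strange := NUMBER PLUS_SYMBOL+ NUMBER? */
    static int strange(List<String> in) {
        int pos = one(in, 0, "NUMBER");
        if (pos < 0) return -1;
        pos = one(in, pos, "PLUS_SYMBOL");          // "+" needs at least one
        if (pos < 0) return -1;
        pos = many(in, pos, "PLUS_SYMBOL");
        int optional = one(in, pos, "NUMBER");      // "?" may fail without failing the rule
        return optional < 0 ? pos : optional;
    }

    /** Strange3 := NUMBER PLUS_SYMBOL+ PLUS_SYMBOL NUMBER */
    static int strange3(List<String> in) {
        int pos = one(in, 0, "NUMBER");
        if (pos < 0) return -1;
        pos = one(in, pos, "PLUS_SYMBOL");
        if (pos < 0) return -1;
        pos = many(in, pos, "PLUS_SYMBOL");         // takes every remaining "+"
        pos = one(in, pos, "PLUS_SYMBOL");          // so there is never one left here
        if (pos < 0) return -1;
        return one(in, pos, "NUMBER");
    }

    public static void main(String[] args) {
        List<String> input = List.of("NUMBER", "PLUS_SYMBOL", "PLUS_SYMBOL", "NUMBER");
        System.out.println(strange(input));
        System.out.println(strange3(input));
    }
}
```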

The "or"/"choice" operator

A core operator, and one where the greedy element of PEG parsing is so important, and also

one where some of the innovations present in Austen are to be found, is the "or" operator.

The "or" operator matches one of a set of possible sub patterns.

Traditionally, with PEG parsers, this is marked by using a "/" symbol, in place of the "|" symbol

used with other parsing approaches. This alteration in syntax is to emphasise the greedy nature

of the "or" operation, where the sub patterns are attempted, and the first one that successfully

consumes input is used, and no further options are tried. This is different to say the case

with NFA regular expressions used in Austen's Lex blocks, where the longest match is used (or rather

potentially used, if that gives the longest match for the overall pattern). I think this change of

symbol is pointless, so I will use the traditional vertical bar, "|" (and AustenX uses the "/" for

another purpose). So, for example:

IdPlus := ID PLUS_SYMBOL

Options :=

IdPlus | { NUMBER PLUS_SYMBOL} | ID+

In this case, the pattern "Options" can either be matched by the pattern "IdPlus", or a number token followed

by a "+" token, or one or more "ID" tokens. It cannot be matched by more than one option, and the options

are tried in order. First, the pattern "IdPlus" is checked to see if it successfully consumes input. If so,

then "Options" succeeds (and the cursor moves on), or else the cursor returns to the starting token (for this

resolution), and the next option is tried (the number and the "+"). If no option succeeds, then the pattern "Options"

fails.
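
The ordered nature of the choice can be sketched in Java as below. Each option is tried from the same starting position, which is what returning the cursor to the starting token amounts to, and the first option to succeed is taken. The code is a hand-written illustration with made-up helper names, not generated output.

```java
import java.util.List;

// Hand-coded version of: Options := IdPlus | { NUMBER PLUS_SYMBOL } | ID+
class ChoiceSketch {
    /** Consumes one expected token at pos; returns the new position or -1. */
    static int token(List<String> in, int pos, String expected) {
        return (pos < in.size() && in.get(pos).equals(expected)) ? pos + 1 : -1;
    }

    /** IdPlus := ID PLUS_SYMBOL */
    static int idPlus(List<String> in, int start) {
        int pos = token(in, start, "ID");
        return pos < 0 ? -1 : token(in, pos, "PLUS_SYMBOL");
    }

    /** { NUMBER PLUS_SYMBOL } */
    static int numberPlus(List<String> in, int start) {
        int pos = token(in, start, "NUMBER");
        return pos < 0 ? -1 : token(in, pos, "PLUS_SYMBOL");
    }

    /** ID+ */
    static int idMany(List<String> in, int start) {
        int pos = token(in, start, "ID");
        if (pos < 0) return -1;
        while (token(in, pos, "ID") >= 0) pos++;
        return pos;
    }

    static int options(List<String> in, int start) {
        int end = idPlus(in, start);
        if (end >= 0) return end;          // first success wins; later options are never tried
        end = numberPlus(in, start);       // retry from the original start position
        if (end >= 0) return end;
        return idMany(in, start);
    }

    public static void main(String[] args) {
        System.out.println(options(List.of("ID", "PLUS_SYMBOL"), 0));
        System.out.println(options(List.of("ID", "ID", "ID"), 0));
    }
}
```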

Lookahead, non-consuming, operators

The next set of operators that PEGs use are a bit less common in the world of parsing, as they involve

"looking ahead" in the input, but not actually consuming input.

Followed by, "&"

The first is the followed by operator, marked

with an "&" before the elements to check (not after like with "+", "*", "?"). This operator checks that

the input following the current cursor position matches a set of pattern elements. So for example:

FollowedBy := ID & PLUS_SYMBOL

In this case, the pattern "FollowedBy" is successful if it consumes first an "ID" token, and then is followed by

a "+". Because the followed by "&" operator does not consume input, the cursor will be left after the "ID" (at

the point of the "+" token).

Not followed by, "!"

A similar operator, that is used in a very similar fashion to "&", is the not followed by operator,

which again, does not consume any input, but succeeds if it fails to match the specified pattern. It is used

to check if something is not followed by a certain pattern. So for example:

NotFollowedBy := ID ! PLUS_SYMBOL

In this case, the pattern "NotFollowedBy" is successful if it consumes first an "ID" token, and then is followed by

anything but a "+" token. Because the not followed by "!" operator does not consume input, the cursor will be left after the "ID" (at

the point of whatever token follows it). One can combine operators of course, so the following is valid too:

NotFollowedByMulti := ID ! { PLUS_SYMBOL | STAR_SYMBOL }

In this case, the pattern "NotFollowedByMulti" is successful if it consumes first an "ID" token, and then is followed by

anything but a "+" token, or a "*". (The grouping "{}" braces are not actually needed because of the

precedence of the "|".)
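
In code, a lookahead is simply a test whose resulting cursor position is thrown away. The Java sketch below hand-codes the FollowedBy and NotFollowedBy patterns over a list of token names; it assumes that "!" succeeds at the end of the input (since nothing can be matched there), and the names are illustrative only.

```java
import java.util.List;

// Hand-coded versions of FollowedBy and NotFollowedBy.
class LookaheadSketch {
    /** Consumes one expected token at pos; returns the new position or -1. */
    static int token(List<String> in, int pos, String expected) {
        return (pos < in.size() && in.get(pos).equals(expected)) ? pos + 1 : -1;
    }

    /** FollowedBy := ID & PLUS_SYMBOL */
    static int followedBy(List<String> in, int start) {
        int pos = token(in, start, "ID");
        if (pos < 0) return -1;
        // Test for the "+", but return the position before it: nothing is consumed.
        return token(in, pos, "PLUS_SYMBOL") >= 0 ? pos : -1;
    }

    /** NotFollowedBy := ID ! PLUS_SYMBOL */
    static int notFollowedBy(List<String> in, int start) {
        int pos = token(in, start, "ID");
        if (pos < 0) return -1;
        // Succeeds only when the "+" cannot be matched; again nothing is consumed.
        return token(in, pos, "PLUS_SYMBOL") < 0 ? pos : -1;
    }

    public static void main(String[] args) {
        System.out.println(followedBy(List.of("ID", "PLUS_SYMBOL"), 0));
        System.out.println(notFollowedBy(List.of("ID", "PLUS_SYMBOL"), 0));
    }
}
```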

| Expression | Meaning |
| --- | --- |
| id | A reference to another pattern, or a token (tokens by convention are all-caps, patterns are CamelCaps). |
| e1 e2 | Sequential match: first expression e1 is consumed, then expression e2. |
| { e1 e2 } | Block match: consume expressions within block, but treat as an atomic block for further operations. |
| e? | Attempt to consume expression e; do not fail the pattern if unsuccessful. |
| e+ | Consume at least one expression e, and then as many more as possible. |
| e* | Consume as many of expression e as possible, or none. Equivalent to e+? |
| e1 \| e2 | Consume either e1 or e2, trying e1 first (if successful, e2 is never tested). |
| &e | Is a successful match in a pattern if expression e is able to be consumed from the current position in the input. Does not consume any input. |
| !e | Is a successful match in a pattern if expression e is not able to be consumed from the current position in the input. Does not consume any input. |

Memorisation taster.

In the previous example, of a function that consumes input, there is a call to another function that consumes input. This raises

a couple of issues. The first is efficiency. It may well be possible that a particular rule may be invoked multiple times

at a particular cursor position, as different rules are applied (for example, consider an "or" operation), yet each call

will yield the same result, with the same computations. Is it possible to avoid this inefficiency? The answer is yes, using a

dynamic programming solution, where a table of rule resolutions at particular cursor positions is stored; this will be elaborated on later.

For the most part, the user of AustenX does not have to really understand the memorisation (if used), except that memorisation

is a tradeoff of memory for faster computation (potentially).
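To give a feel for the table idea, the following Java sketch caches the result of one rule per cursor position. It is only an assumption-laden illustration, not AustenX's actual data structure: the names (MemoSketch, memoNumber, numberRuleRuns), the string keys and the "Sum" rule are all invented for the example.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Illustrative only: a tiny memo table keyed by "rule@position". All names are hypothetical.
class MemoSketch {
    static final int FAIL = -1;

    private final List<String> tokens;
    private final Map<String, Integer> memo = new HashMap<>();
    int numberRuleRuns = 0; // counts how often the real work is done

    MemoSketch(List<String> tokens) {
        this.tokens = tokens;
    }

    // Match a single token kind at pos; returns the position after it, or FAIL.
    private int token(String kind, int pos) {
        return (pos < tokens.size() && tokens.get(pos).equals(kind)) ? pos + 1 : FAIL;
    }

    // The "expensive" rule whose results we want to remember.
    private int numberRule(int pos) {
        numberRuleRuns++;
        return token("NUMBER", pos);
    }

    // Look the result up in the table first; compute and store it only on a miss.
    private int memoNumber(int pos) {
        String key = "Number@" + pos;
        Integer cached = memo.get(key);
        if (cached != null) {
            return cached;
        }
        int result = numberRule(pos);
        memo.put(key, result);
        return result;
    }

    // Sum := { Number PLUS_SYMBOL Number } | Number
    int sumRule(int pos) {
        int p = memoNumber(pos);
        if (p != FAIL) p = token("PLUS_SYMBOL", p);
        if (p != FAIL) p = memoNumber(p);
        if (p != FAIL) return p;
        return memoNumber(pos); // second option reuses the stored result
    }

    public static void main(String[] args) {
        MemoSketch m = new MemoSketch(List.of("NUMBER"));
        System.out.println(m.sumRule(0) + " " + m.numberRuleRuns); // 1 1
    }
}
```

Both options of the "or" ask for a Number at position 0, but the rule body runs only once; the second request is answered from the table, at the cost of the memory the table occupies.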

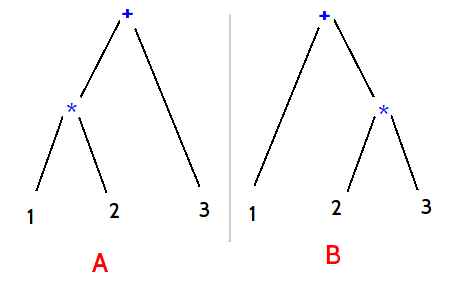

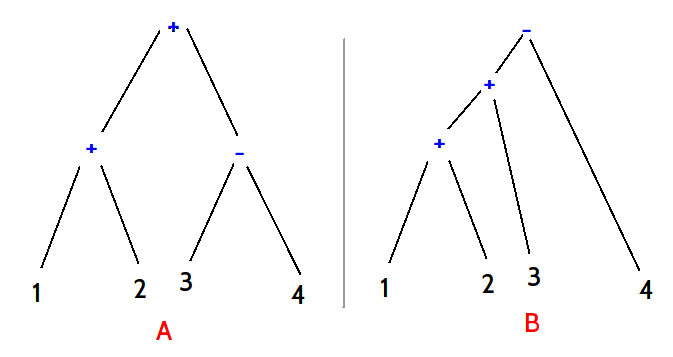

Recursion

Returning to the issues the example presents, there is a second one, and it comes from recursive calls. It is, and should be, perfectly

sensible to have rules that refer to themselves. The prototypical example of this is with "expressions" in languages. A basic example is

the following:

Expression :=

{ Expression PLUS_SYMBOL Expression } |

NUMBER

By this, Expression is a rule which consumes either the series Expression, followed by the PLUS_SYMBOL ("+"),

followed by another Expression, or just a simple NUMBER token. Remember the greedy nature of "or": if the first option

succeeds, the second is not tested.

Now, there is a problem if the recursion is "left". This can be seen if we look at what code might be equivalent to this rule:

function ExpressionRuleOptionOne() {

markCursor();

if(!ExpressionRule()) { backToMark(); return false; }

if(!consumePlusSymbol()) { backToMark(); return false; }

if(!ExpressionRule()) { backToMark(); return false; }

removeLastMark();

return true;

}

function ExpressionRuleOptionTwo() {

markCursor();

if(!consumeNumber()) { backToMark(); return false; }

removeLastMark();

return true;

}

function ExpressionRule() {

if(ExpressionRuleOptionOne()) { return true; }

if(ExpressionRuleOptionTwo()) { return true; }

return false;

}

This is a slightly more involved piece of code, and not quite how AustenX generates things, but you should get the idea. The

astute reader (that's you) will pick out though that there is a slight "quirk" in the code. ExpressionRule calls

ExpressionRuleOptionOne, without consuming input, and ExpressionRuleOptionOne calls ExpressionRule,

again without consuming any input. The result of this is that the two functions will keep calling each other,

and we are staring into the abyss of infinite recursion. Note that this problem does not occur if the recursion is

not "left" (that is, if it occurs after at least one token of input has been consumed). No left recursion seems such a shame, because that is a nice way to write

a grammar, and it would be sad not to be able to write such rules. Thankfully, a number of similar solutions to the

problem have been found, and AustenX uses such a solution, so such recursions are perfectly acceptable. Unfortunately, the solutions have

quirks regarding precedence, and do not always give intuitive results. Thankfully again, AustenX is a bit cool, and

sorts that out too. Further details on that will have to wait until later in this document though.
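To make the runaway recursion concrete, here is a small Java rendering of the naive rule functions, with one addition that is purely an assumption of this sketch: a depth counter (MAX_DEPTH and the TooDeep exception are hypothetical) that aborts the parse instead of letting the stack overflow. It shows what goes wrong without any left-recursion support; it is not how AustenX handles such rules.

```java
import java.util.List;

// Illustrative only: naive recursive descent for
//   Expression := { Expression PLUS_SYMBOL Expression } | NUMBER
// MAX_DEPTH and TooDeep are hypothetical stand-ins for a real stack overflow.
class LeftRecursionSketch {
    static final int MAX_DEPTH = 50;

    static class TooDeep extends RuntimeException {
        final int cursorAtAbort;

        TooDeep(int cursorAtAbort) {
            super("recursion limit hit with cursor at " + cursorAtAbort);
            this.cursorAtAbort = cursorAtAbort;
        }
    }

    private final List<String> tokens;
    private int cursor = 0;
    private int depth = 0;

    LeftRecursionSketch(List<String> tokens) {
        this.tokens = tokens;
    }

    private boolean consume(String kind) {
        if (cursor < tokens.size() && tokens.get(cursor).equals(kind)) {
            cursor++;
            return true;
        }
        return false;
    }

    boolean expressionRule() {
        depth++;
        try {
            if (depth > MAX_DEPTH) {
                throw new TooDeep(cursor);
            }
            return optionOne() || optionTwo();
        } finally {
            depth--;
        }
    }

    // { Expression PLUS_SYMBOL Expression }
    private boolean optionOne() {
        int mark = cursor;
        // Left recursion: we re-enter expressionRule before consuming anything.
        if (!expressionRule()) { cursor = mark; return false; }
        if (!consume("PLUS_SYMBOL")) { cursor = mark; return false; }
        if (!expressionRule()) { cursor = mark; return false; }
        return true;
    }

    // NUMBER
    private boolean optionTwo() {
        return consume("NUMBER");
    }

    public static void main(String[] args) {
        LeftRecursionSketch p =
            new LeftRecursionSketch(List.of("NUMBER", "PLUS_SYMBOL", "NUMBER"));
        try {
            p.expressionRule();
        } catch (TooDeep e) {
            System.out.println(e.getMessage()); // recursion limit hit with cursor at 0
        }
    }
}
```

The cursor is still at 0 when the limit is reached: fifty nested calls were made and not a single token was consumed, which is exactly the "quirk" described above.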

Writing an Austen PEG parser

The above should have given the reader a brief overview of how PEG based parsers work. Don't worry if it didn't, as

we will go over the idea again, but this time within the particular way Austen works.

There are more features that AustenX allows, and we will examine

those in greater detail as we proceed. First, we begin with an explanation of the basic syntax.

The PEG block

The PEG block is defined in a similar way to the LEX block. A basic example would be:

peg ExamplePEG tokens Example{

...

}

In this case, a PEG block named "ExamplePEG" is being defined, that uses the "Example" tokens (the tokens the LEX

block produces). The name given after tokens is the name of the tokens block, not the lex block. One could write

their own lexical analyser; the PEG parser does not care how the tokens were derived, just what form they take.

The peg keyword can be replaced with packrat, as historically that is what was used, but then

I realised that the parser has moved beyond being specifically a packrat parser, and may in fact not use packrat

parsing at all (if no memorisation is used).

Inside the PEG block things get interesting. The typical element within the block is the pattern

declaration. Pattern declarations come in two forms: the basic pattern, and the option pattern. Both equate with

a general PEG pattern, but the option pattern has a specific set of options (choice options - like with the "|"

operator), that have specific meaning in how the parsing is performed, and what code is produced as a result.

Basic patterns

Both basic and option patterns are marked with the pattern keyword. A basic pattern is of the form:

pattern Name ( PatternElements );

That is, first the pattern keyword, then the name of the pattern (in normal identifier form), then

a round bracket "(", then the pattern elements, then a closing bracket ")", then an optional semi-colon (I try to

keep semi-colons optional, even though I like using them, as an example of the awesomeness of PEG parsing, and

the general lack of need for such things).

Option patterns

An option pattern is of the form:

pattern Name {

OptionOne ( PatternElements1 );

OptionTwo ( PatternElements2 );

...

OptionN ( PatternElementsN );

}

That is, first the pattern keyword, then the name of the pattern (in normal identifier form), then

a curly bracket "{", then the set of different options, followed by a closing bracket "}". The individual options

(of which there can be one or more) are presented as for the basic patterns, without the extra pattern keywords.

Again, the ending semi-colon in each case is optional.

An option pattern will match whatever option matches first. That is, the first option, in order presented,

that successfully consumes input, will be the pattern that matches overall. But, before more explanation can

be given on that, we should first attend to what the "PatternElements" are.

The Basic Pattern Elements

The pattern elements follow a similar form to the PEG language described previously. The most atomic element is the

pattern or token reference, which is just the name of the thing being referenced. If there is a pattern with the same

name as a token, the pattern will be detected first. Elements can be listed sequentially, such that each element is in

turn tested to consume input, so the following is valid:

pattern Example2 {

Number ( NUMBER );

ID ( ID );

}

pattern Example1( ID STRING Example2 ID Example2 );

In the above example, the pattern Example1 attempts to consume an ID token, then a STRING token, then whatever

Example2 matches (in this case, either a NUMBER or an ID), another ID, and another Example2 (which may be different

to what was consumed by the first Example2 reference).

The choice operator "|", the zero or more ("*"), one or more ("+"), and maybe operators ("?"), as well as the

followed-by, and not-followed-by lookahead operators ("&", and "!" respectively), work as described in the section

on general PEGs. Elements can also be grouped as before by enclosing the elements in curly brackets ("{ }"). Thus, the following

is a valid Austen rule:

pattern Example3 ( Example1? Example2* STRING+ !ID Example1 | Example2 { STRING ID Example2 }+ );

Divided elements

The previous section described the direct translation of normal PEG expressions to Austen pattern definitions. There are a number of

further adaptations to the basic PEG structure that Austen uses, but most of those are non-trivial extensions, and are described later. One that is relatively

simple, and acts as "syntactic sugar" (though it is compiled differently), is the divider operator, marked by "/", which is used in normal

PEGs to show the greedy choice option (for which Austen uses "|"). The divider operator is useful when one

wishes to parse a list of items, such as one would do with the zero-or-more ("*"), or one-or-more ("+") operators, but

wants the list to be separated by some other items. In English, the obvious example is a normal list being separated by commas

(cat, dog, moose), which is commonly transferred across to programming languages for listing items or parameters (a = [1,2,3]). Of note is

that the comma divides the items; it is not valid to have another comma after the last item, or before the first. Using

basic PEG grammar we could write an Austen pattern as such (hopefully the meaning of the unmentioned named elements is obvious from

their names):

pattern FunctionCall ( ID LEFT_ROUND { Expression { COMMA Expression } * }? RIGHT_ROUND );

This pattern matches a "function call", which is of the form: function name, followed by a left round bracket,

followed by zero or more parameter values (from general expressions), separated by commas, followed by a right

round bracket. That is, a function call common to many languages. This form is a little convoluted, and not very easy to read.

The divider operator can be used to state the same form as follows:

pattern FunctionCall ( ID LEFT_ROUND Expression* / COMMA RIGHT_ROUND );

The "Expression* / COMMA" bit can be read as "zero or more Expressions, divided by a COMMA". Alternatively, if for some reason

you want one or more elements divided by something you could use something like the following:

pattern FunctionCallOdd ( ID LEFT_ROUND Expression+ / COMMA RIGHT_ROUND );
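For readers who think more easily in code, the following Java sketch shows one way the "zero or more, divided by" idea can be matched by hand. It is an illustration under stated assumptions, not AustenX output: tokens are plain strings, Expression is simplified to a single NUMBER token, and the names (DividerSketch, zeroOrMoreDivided, parses) are invented here.

```java
import java.util.List;

// Illustrative only: hand-written matching of  ID LEFT_ROUND Expression* / COMMA RIGHT_ROUND
// with Expression simplified to a NUMBER token. All names are hypothetical.
class DividerSketch {
    private final List<String> tokens;
    private int cursor = 0;

    DividerSketch(List<String> tokens) {
        this.tokens = tokens;
    }

    private boolean consume(String kind) {
        if (cursor < tokens.size() && tokens.get(cursor).equals(kind)) {
            cursor++;
            return true;
        }
        return false;
    }

    // item* / divider : zero or more items separated by the divider.
    // Never fails; returns how many items were consumed.
    private int zeroOrMoreDivided(String item, String divider) {
        if (!consume(item)) {
            return 0;
        }
        int count = 1;
        while (true) {
            int mark = cursor;
            if (consume(divider) && consume(item)) {
                count++;
            } else {
                cursor = mark; // a divider with no item after it is not part of the list
                break;
            }
        }
        return count;
    }

    boolean functionCall() {
        int mark = cursor;
        if (consume("ID") && consume("LEFT_ROUND")) {
            zeroOrMoreDivided("NUMBER", "COMMA");
            if (consume("RIGHT_ROUND")) {
                return true;
            }
        }
        cursor = mark;
        return false;
    }

    // Convenience wrapper for trying a token sequence.
    static boolean parses(String... kinds) {
        return new DividerSketch(List.of(kinds)).functionCall();
    }

    public static void main(String[] args) {
        // f(1,2)
        System.out.println(parses("ID", "LEFT_ROUND", "NUMBER", "COMMA", "NUMBER", "RIGHT_ROUND")); // true
        // f(1,)
        System.out.println(parses("ID", "LEFT_ROUND", "NUMBER", "COMMA", "RIGHT_ROUND"));           // false
    }
}
```

The trailing-comma case fails because the loop backs up to before the comma when no item follows it, so the right bracket is then looked for at the comma. The one-or-more form would differ only in failing when the first item is missing.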

The divider does not have to be a single token, it can be any general element, so the following is valid too:

pattern FunctionCallOdder ( ID LEFT_ROUND Expression+ / { COMMA { LEFT_CURLY RIGHT_CURLY }* / STRING }? RIGHT_ROUND );

If that does not make sense to you, it means one or more expressions divided, possibly, by a comma followed by zero or more "{}" couples divided

by a String token (eg "cat"). Also note that legacy versions of Austen files (if you are looking through source

written by me) use the simpler version of the divider where the zero-or-more, or one-or-more bit is not present, such as: